Handling of Multiple Data Platforms

The choice is yours

A customer working in business IT recently told me: “Whatever the technology is, in the end it has to work for us.” What he means is that the experience matters. You can have every capability available today in a data & analytics platform, but if the barriers are too high, it will not create value.

Let’s Start With a Short Story

For various reasons, enterprises run two or even more central data platforms. Typical reasons I see are:

Merger of two companies

Companies with rather decentralized organisations establish a second (or third, or fourth) platform

Platforms are initially built for specific purposes but grow into all-purpose data platforms over time

Companies start migrating from one platform to another but never finish

etc.

How it started…

My customer comes from a rather decentralized approach and is on the way to establishing Data Mesh as a socio-technical data architecture.

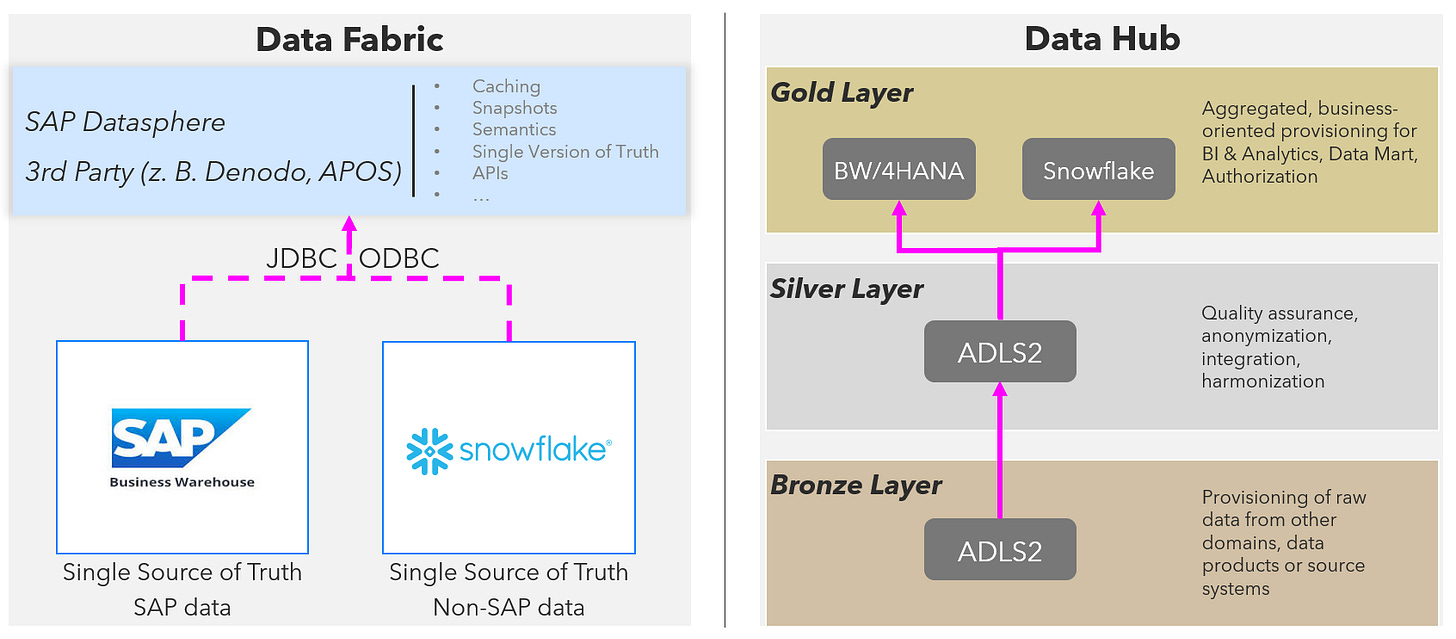

The two main platforms, SAP BW/4HANA and Snowflake, need the same data, and different approaches are being discussed, such as direct data exchange or establishing an enterprise bus with Apache Kafka. One possible approach is to solve it through architectural thinking.

Fig. 1: Possible architectural solution patterns for a customer scenario

How it is going…

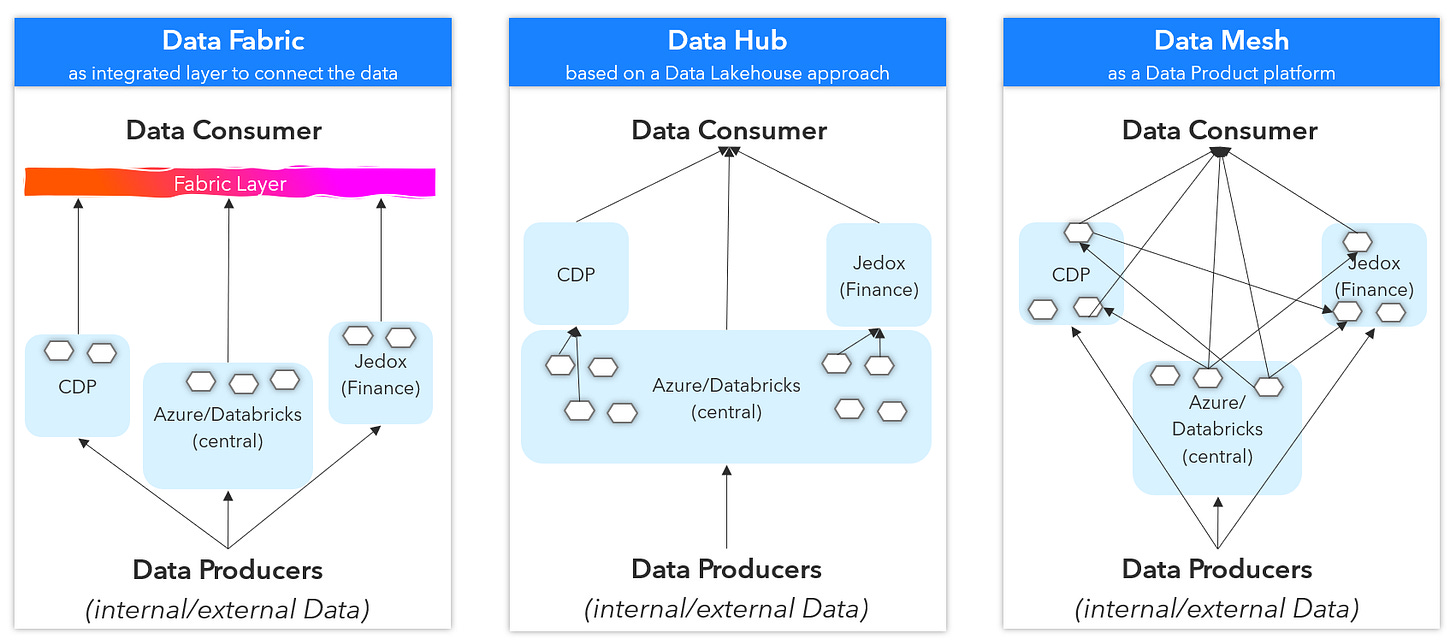

While Data Mesh already supports decentralized architectures, it can easily be combined with approaches like Data Fabric and Data Hub. A Data Fabric can combine and provide data from different data platforms, but it sometimes hits limitations through virtualization, especially with complex calculations or the historization of combined datasets. A Data Hub is a data distribution approach: either Data Mesh itself is used as a form of Data Hub, or, in a multi-platform Data Mesh approach, the Mesh is built upon a common data layer provided by the Data Hub.

Outlook

Solving complex data landscape issues through architecture can lead to a more efficient setup and better data democratization. Direct data exchange is always possible, but it leads to complex maintenance situations over time and often requires third-party solutions to handle bi-directional data exchange.

Look at Another Customer Situation

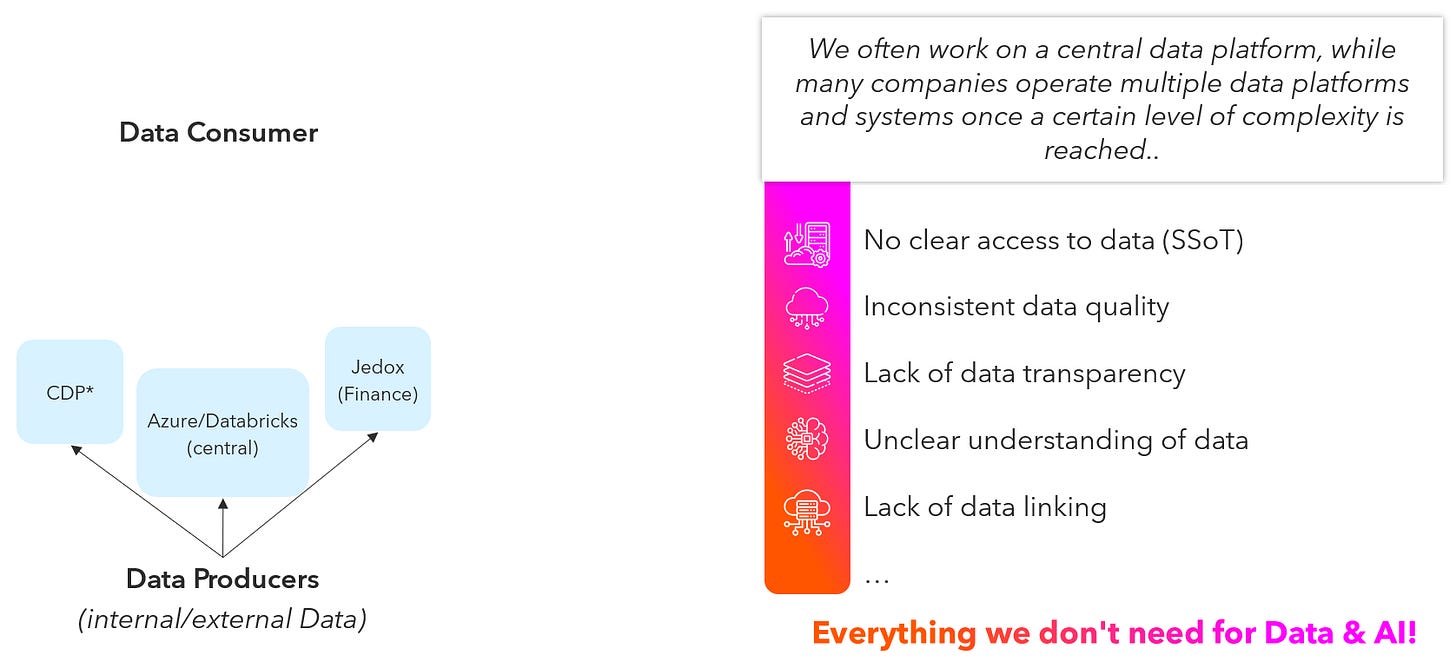

While building a new data platform, we recognized that business departments were independently building business-specific data platforms for their own needs. This adds to the reasons for multiple platforms described above, and there can be several motivations for it, such as:

the platform supports specialized functions, like a Customer Data Platform (CDP) that needs to interact with customers and put analytical functions into action

the platform supports functionality like corporate planning and simulation

storage and historization of data need to be controlled in specific ways for regulatory reasons

while a central platform is often generic for a broad range of use cases, you need something for specific user groups to support their daily work

Fig. 2: The reality of multiple data platforms for good reasons

As we can see above, multiple platforms are common but come with many trade-offs. It is a rather typical situation when the complexity of the organization is high and data usage is increasing.

Fig. 3: Solution patterns discussed, including data product-orientation as a general idea

Similar to the first customer case, we discussed several architectural solution patterns. While every pattern has advantages and disadvantages, data products can be added to every solution pattern, especially if you already have strong business ownership.

Opening the Field for General Directions

Let’s dive into some more general ideas beyond these patterns to differentiate your options a bit.

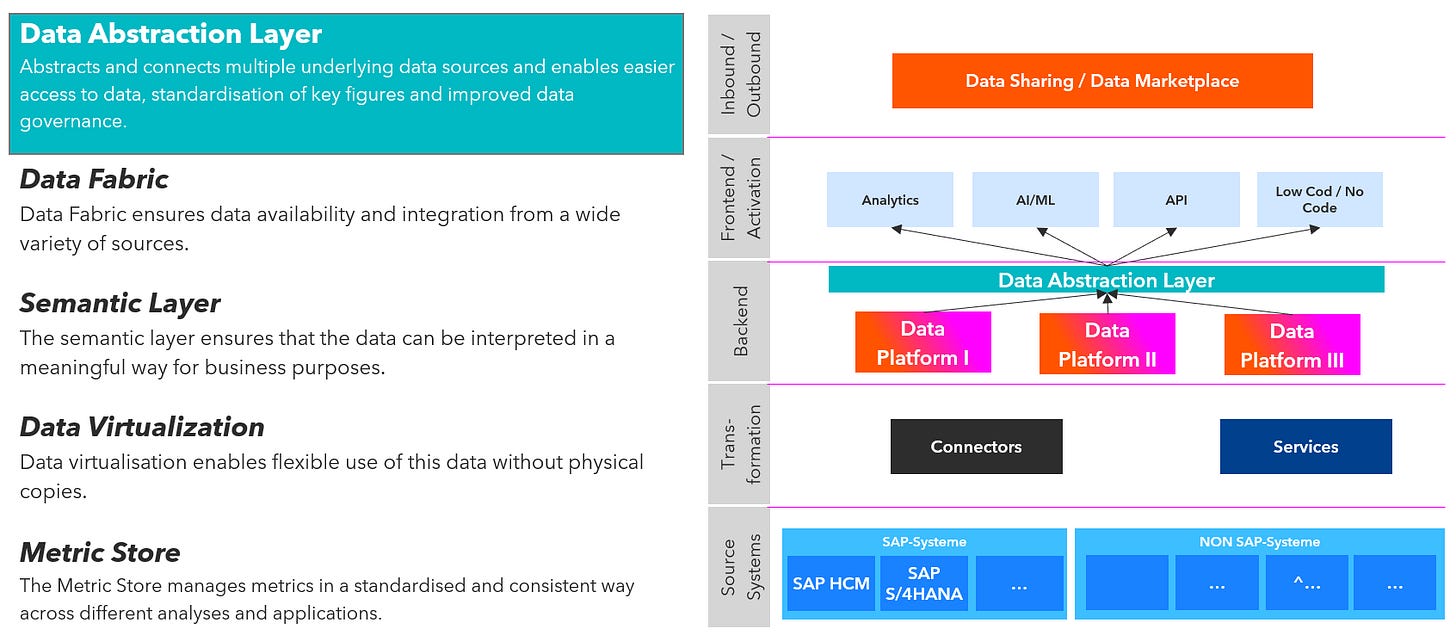

Depending on your requirements, budget, and technical and organisational restrictions, we can think in two directions. One is what I would call a “Data Abstraction Layer”. It is about making access to distributed data locations easier by connecting them. There are different ways to do that; in my opinion, “Data Fabric” is the premium solution, but it is not always necessary. We should never forget that Data Fabric can be a very complex technical approach.

Fig. 4: Different technical approaches to solving access to different data platforms
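To make the “Data Abstraction Layer” idea a bit more concrete, here is a minimal Python sketch under strong assumptions: consumers address datasets by a logical name, and the layer routes each request to the platform that physically stores it. `PlatformConnector`, `InMemoryConnector`, `DataAbstractionLayer`, and all platform and dataset names are hypothetical; the in-memory connectors stand in for real drivers, and a real Data Fabric typically adds virtualization, security, and metadata management on top.

```python
from dataclasses import dataclass, field
from typing import Protocol

Row = dict[str, object]  # one record: column name -> value


class PlatformConnector(Protocol):
    """Hypothetical adapter interface that every connected platform implements."""

    def read(self, physical_name: str) -> list[Row]: ...


@dataclass
class InMemoryConnector:
    """Stand-in for a real platform driver; keeps its tables in memory."""

    tables: dict[str, list[Row]]

    def read(self, physical_name: str) -> list[Row]:
        return list(self.tables[physical_name])


@dataclass
class DataAbstractionLayer:
    """Routes logical dataset names to the platform that physically stores them."""

    connectors: dict[str, PlatformConnector] = field(default_factory=dict)
    routes: dict[str, tuple[str, str]] = field(default_factory=dict)

    def register_platform(self, name: str, connector: PlatformConnector) -> None:
        self.connectors[name] = connector

    def map_dataset(self, logical_name: str, platform: str, physical_name: str) -> None:
        if platform not in self.connectors:
            raise KeyError(f"unknown platform: {platform}")
        self.routes[logical_name] = (platform, physical_name)

    def read(self, logical_name: str) -> list[Row]:
        # Consumers only use the logical name, never the physical location.
        platform, physical_name = self.routes[logical_name]
        return self.connectors[platform].read(physical_name)


# Usage: two hypothetical platforms, each holding one table.
dal = DataAbstractionLayer()
dal.register_platform("bw4hana", InMemoryConnector({"customer_master": [{"customer_id": 1, "region": "EMEA"}]}))
dal.register_platform("snowflake", InMemoryConnector({"sales_orders": [{"customer_id": 1, "amount": 250.0}]}))
dal.map_dataset("customers", "bw4hana", "customer_master")
dal.map_dataset("orders", "snowflake", "sales_orders")

# Combine data from both platforms through the layer (a simple in-memory join).
customers = {r["customer_id"]: r for r in dal.read("customers")}
enriched = [{**o, "region": customers[o["customer_id"]]["region"]} for o in dal.read("orders")]
print(enriched)  # [{'customer_id': 1, 'amount': 250.0, 'region': 'EMEA'}]
```

In this sketch the join runs in the consuming code; pushing such calculations, or historization of the combined result, across platforms is exactly where virtualization-based approaches can reach their limits.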

But sometimes there is no need to connect distributed data at all; it is rather about integrated access and transparency. You may tell me you need an integrated approach, but often you simply want one. Typically, you already do it somehow. If it works and causes no fundamental problems, why should you change it? There is no single truth, and maybe the promise of an integrated enterprise data warehouse with a canonical data model was the biggest lie ever, leading to high effort, technical debt, and unsatisfied users. (Read also The Truth about the “Single Version of Truth”.)

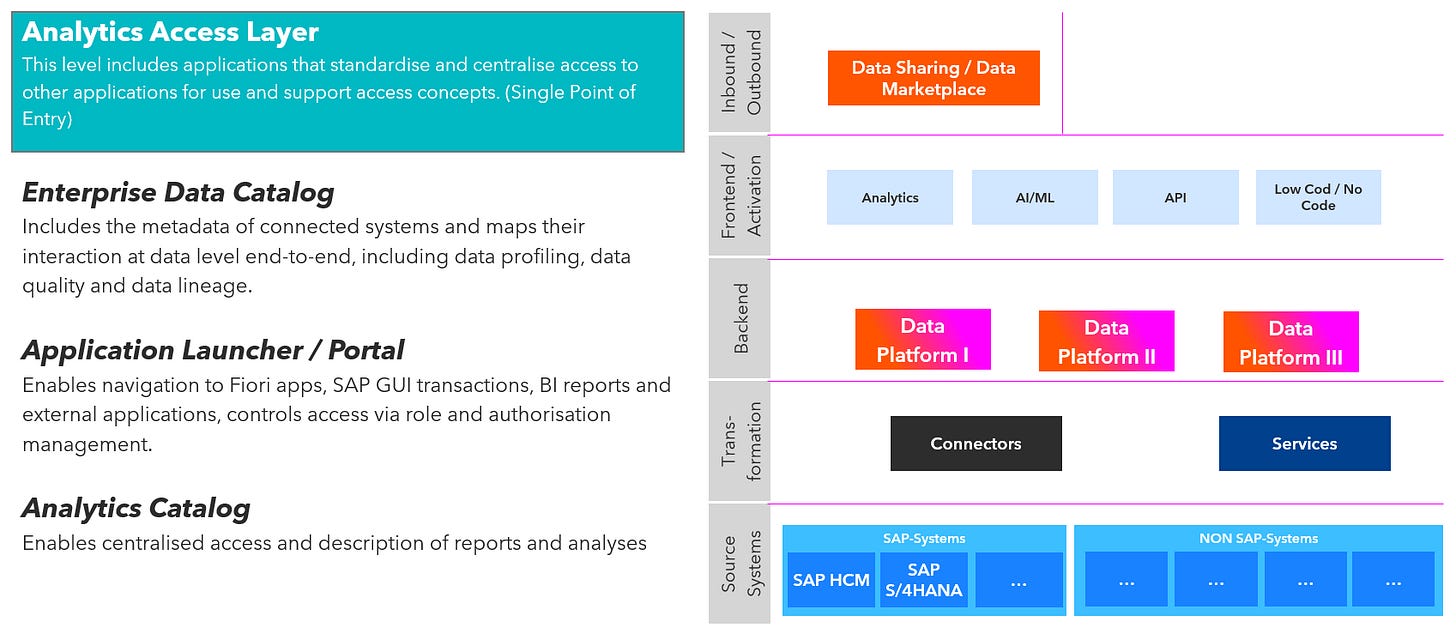

Therefore, consider a common experience plane that gives you transparency and access to all your data assets. I would call that the “Analytics Access Layer”.

Fig. 5: Gaining transparency and access to your assets through an Analytics Access Layer

As we can see, there are separate options here. While Data Catalogs are important and on the rise today, many companies still lack one and therefore have no transparency. The problem is the effort you need to put in.

An Application Launcher/Portal and an Analytics Catalog are very similar. The Analytics Catalog is very specific and focused, and I have not seen many successful solutions here. An Application Launcher, by contrast, can do the job very well and makes it easier to integrate operational and analytical apps so they fit better into users’ daily work. Furthermore, many organisations already have some kind of portal, using SharePoint, wikis, or similar tools to provide access to applications from a single point of entry.
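A minimal sketch of the “Analytics Access Layer” idea in Python, assuming a simple in-memory registry: each report, data product, or app is registered once with its platform, owner, and entry point, and users search one place instead of each platform. `Asset`, `AnalyticsAccessLayer`, the platform names, and the example.com URLs are hypothetical; in practice this would more likely build on an existing data catalog or portal than on custom code.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Asset:
    """One entry in the access layer: a report, data product, or app."""

    name: str
    kind: str      # e.g. "report", "data_product", "operational_app"
    platform: str  # where the asset lives
    owner: str     # business owner responsible for it
    url: str       # entry point the launcher opens
    tags: frozenset[str] = frozenset()


@dataclass
class AnalyticsAccessLayer:
    """Single point of entry listing assets from all platforms; moves no data."""

    assets: list[Asset] = field(default_factory=list)

    def register(self, asset: Asset) -> None:
        self.assets.append(asset)

    def search(self, term: str) -> list[Asset]:
        """Case-insensitive match on asset name or tags, in registration order."""
        term = term.lower()
        return [
            a for a in self.assets
            if term in a.name.lower() or any(term in t.lower() for t in a.tags)
        ]

    def by_platform(self) -> dict[str, list[str]]:
        """Transparency view: which assets exist on which platform."""
        view: dict[str, list[str]] = {}
        for a in self.assets:
            view.setdefault(a.platform, []).append(a.name)
        return view


# Usage with hypothetical assets spread over three platforms.
aal = AnalyticsAccessLayer()
aal.register(Asset("Sales Dashboard", "report", "snowflake", "Sales",
                   "https://portal.example.com/sales", frozenset({"revenue", "orders"})))
aal.register(Asset("Financial Planning", "operational_app", "bw4hana", "Controlling",
                   "https://portal.example.com/planning", frozenset({"planning", "revenue"})))
aal.register(Asset("Customer 360", "data_product", "cdp", "Marketing",
                   "https://portal.example.com/customer360", frozenset({"customer"})))

print([a.name for a in aal.search("revenue")])  # ['Sales Dashboard', 'Financial Planning']
print(aal.by_platform())
```

The layer stores only metadata and links while the data stays where it is, which fits the point above that transparency and access, rather than data integration, is sometimes the actual need.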

Conclusion

While I touched on many points here, the general idea is to understand the different options and find the best way to bring data together and use it in a valuable way for your company. Otherwise you will do the same things (reports, integration pipelines, business logic, …) several times over, possibly producing different results. No board likes seeing the same report in different tools showing different key figures. And today, think about giving your upcoming AI agents access to distributed, individualized data that often lacks context and semantics. But going back to a centralized data storage architecture and organization is typically not the way.

I know that in practice it is always complex. I am happy to hear about your experiences and solution patterns.
