AI today has to contend with high expectations and a lack of deeper understanding at the same time. What should we really focus on?

Fig. 1: Overview (generated by ChatGPT)

Understanding AI and Managing Expectations

The public discourse around Artificial Intelligence (AI) frequently oscillates between extreme hype, portraying it as a universal solution, and widespread fear, depicting it as a job destroyer. This often leads to an "AI Hangover" when initial overpromises fail to materialize. It is crucial to manage expectations realistically by providing clear, practical visions of how AI can genuinely assist. Many organizations adopt AI due to hype rather than clear business objectives, which can lead to frustration.

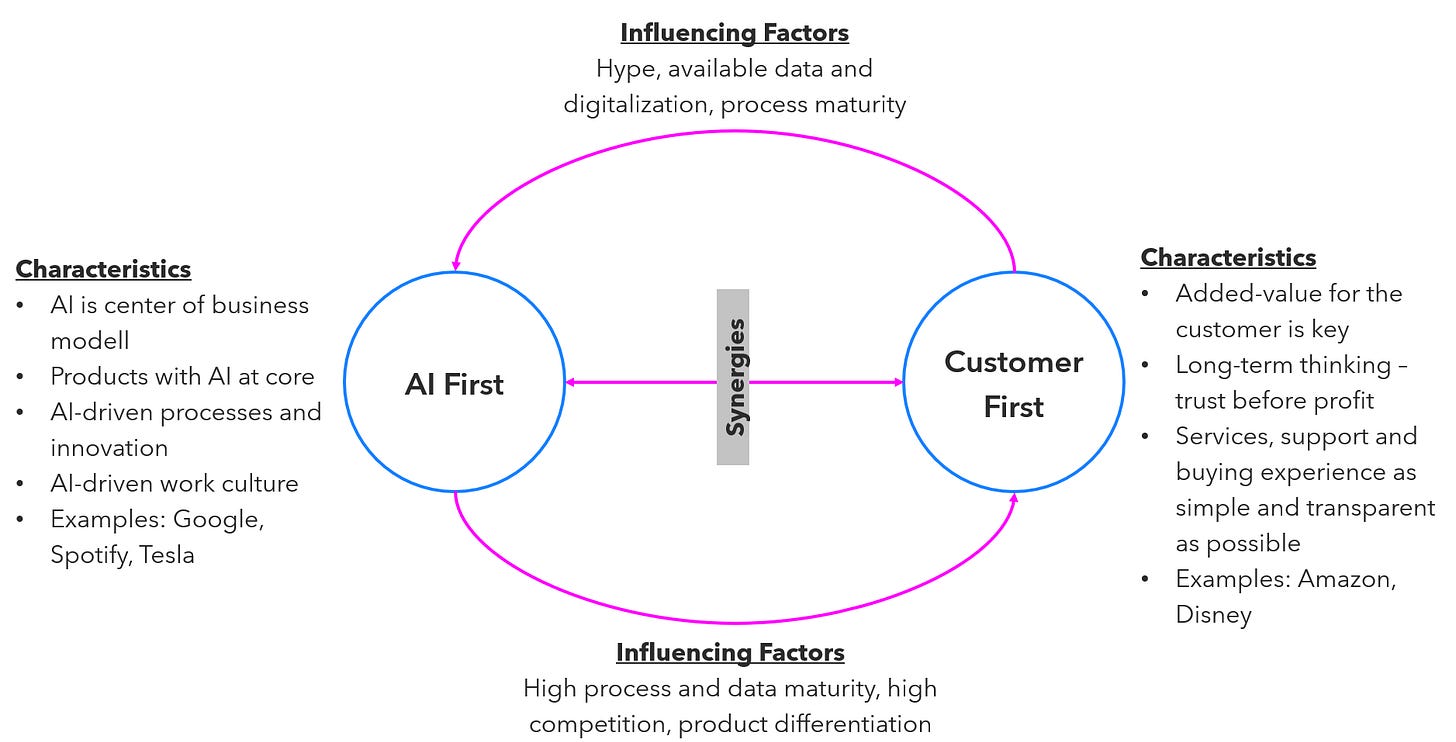

Shift from "AI First" to "Human-Centered" or "Customer First": The focus should not solely be on how AI benefits the company, but primarily on how it benefits users and customers. This involves understanding customer needs in the digital age, anticipating their interaction with AI tools, and enhancing their experience. While some entities might initially adopt an "AI First" mentality to signal innovation, this often emphasizes internal efficiency over external customer value. For example, if a company builds an AI system, it should ask how it can create a better experience for customers searching for products, not just how it makes internal processes faster.

Fig. 2: AI First vs. Customer First

Solve Real Business Problems: The primary goal of AI implementation should be to solve real business problems and support specific business goals, rather than deploying AI for its own sake. Instead of asking where AI can be used, consider routine tasks employees dislike or processes that waste time and talent; these are often ideal candidates for AI-driven automation. An example could be using AI to assist with reviewing legal documents, comparing contracts, or managing internal knowledge.

Fig. 3: Doing AI - finding the right approach

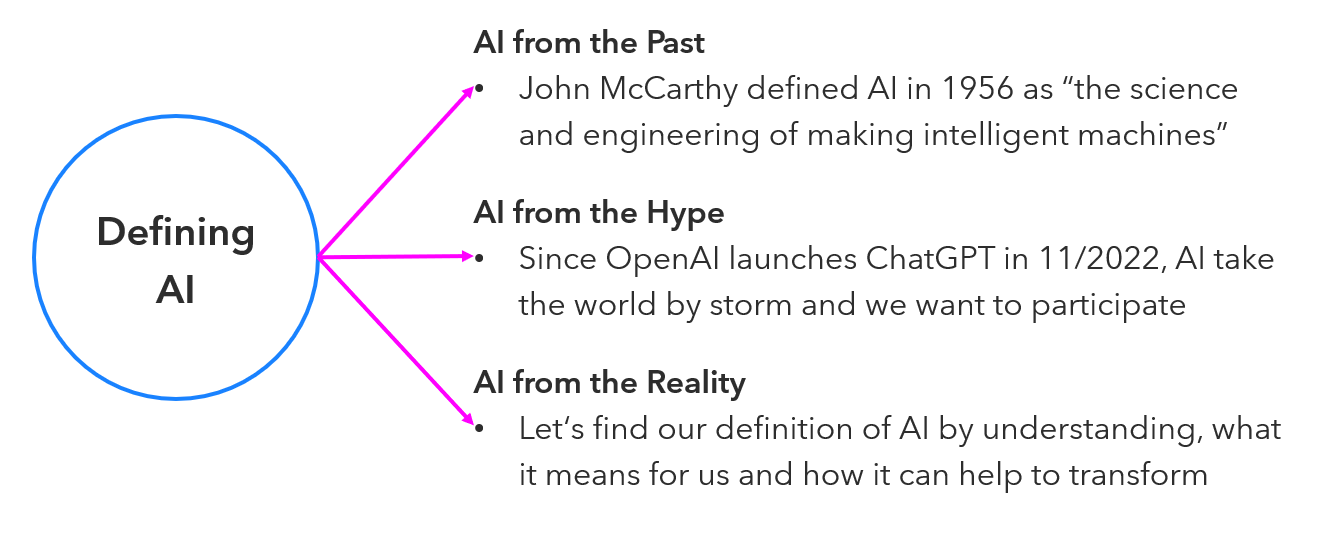

Define AI Clearly: AI is often described as a "suitcase word," meaning everyone defines it differently based on context. This ambiguity makes clear communication challenging and contributes to "AI washing"—mislabeling predictive algorithms or outsourced manual labor as AI. A lack of a standard definition can lead to misunderstandings and poor purchasing decisions. Organizations should establish their own clear definition of AI.

Fig. 4: Defining AI - it is important to find your own definition

Not Every Company Needs to Be an AI Company: Just as not every company needs to be a tech company, not every company needs to transform into an AI company. AI should serve the business, not the other way around. For instance, a company focused on painting houses does not necessarily need to become an AI company.

The Critical Role of Data

There is no AI without data, and no good AI without good data. AI systems operate on data, meaning that any imperfections or biases in the input data will be amplified in the AI's output. This concept has been described as a shift from "shit in, shit out" to "shit in, bullshit out" with AI, where bad data makes results even worse.

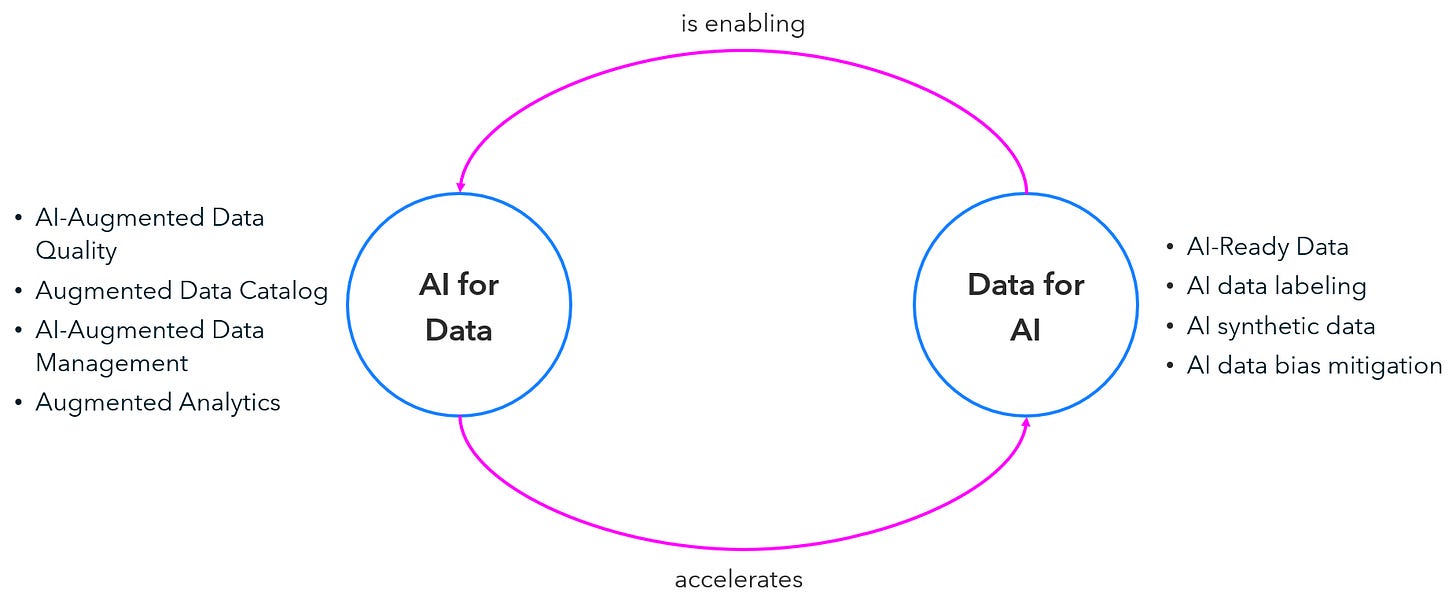

High-Quality Data is Fundamental: High-quality, clean, and representative data are fundamental for effective and fair AI systems. Organizations often face "documentation debt" regarding their data, which hinders AI development and compliance with regulations. Addressing data quality, access, and infrastructure challenges is foundational "homework" that cannot be bypassed by simply applying AI.
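The following minimal Python sketch shows what this homework can look like in practice. It profiles a batch of records for missing values and exact duplicates before the records are used for training. The helper `profile_quality`, the field names and the sample records are hypothetical. Real pipelines usually add further checks for types, value ranges and representativeness.

```python
from collections import Counter
from typing import Any


def profile_quality(records: list[dict[str, Any]], required: list[str]) -> dict[str, Any]:
    """Return simple data-quality indicators for a list of records (hypothetical helper)."""
    # Count how often each required field is absent, None, or an empty string.
    missing: Counter = Counter()
    for rec in records:
        for name in required:
            if rec.get(name) in (None, ""):
                missing[name] += 1

    # Detect exact duplicates by turning each record into a hashable, key-sorted tuple.
    # Assumes field values are hashable (no nested lists or dicts).
    seen = Counter(tuple(sorted(rec.items())) for rec in records)
    duplicates = sum(count - 1 for count in seen.values() if count > 1)

    total = len(records)
    return {
        "rows": total,
        "missing_ratio": {name: (missing[name] / total if total else 0.0) for name in required},
        "duplicate_rows": duplicates,
    }


if __name__ == "__main__":
    sample = [
        {"customer_id": 1, "country": "DE", "revenue": 120.0},
        {"customer_id": 2, "country": "", "revenue": 80.0},
        {"customer_id": 1, "country": "DE", "revenue": 120.0},  # exact duplicate
        {"customer_id": 3, "country": "AT", "revenue": None},
    ]
    print(profile_quality(sample, ["customer_id", "country", "revenue"]))
```

The script reports a missing ratio for each required field and a count of duplicate rows. Numbers like these are a starting point for documenting data quality, not a complete assessment.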

Fig. 5: AI for Data and Data for AI - use them together to reinforce your approach

Data Bias is a Significant Risk: AI models, especially Large Language Models (LLMs), are trained on vast amounts of data, often scraped from the internet. This can introduce and amplify human biases present in the data, leading to skewed or discriminatory outputs. Examples include gender stereotypes (e.g., male CEOs, female nurses) and racial bias in search results (e.g., historical search results showing specific public figures when searching for "gorilla"). It is incredibly difficult to remove bias once it's in the system.
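A crude way to make this kind of skew visible before training is to count how often occupation words appear in the same sentence as gendered words. The tiny corpus, the word lists and the function `gender_skew` below are hypothetical. Real bias audits rely on much larger corpora and more careful methods, so treat this only as an illustration.

```python
import re
from collections import defaultdict

# Hypothetical, deliberately tiny word lists; real audits need far richer lexicons.
MALE = {"he", "him", "his", "man"}
FEMALE = {"she", "her", "hers", "woman"}


def gender_skew(sentences: list[str], occupations: set[str]) -> dict[str, dict[str, int]]:
    """Count, per occupation, the sentences that also contain male or female words.

    Occupation words must be given in lowercase; matching is on whole words only.
    """
    counts = defaultdict(lambda: {"male": 0, "female": 0})
    for sentence in sentences:
        tokens = set(re.findall(r"[a-z]+", sentence.lower()))
        for job in occupations & tokens:
            if tokens & MALE:
                counts[job]["male"] += 1
            if tokens & FEMALE:
                counts[job]["female"] += 1
    return dict(counts)


if __name__ == "__main__":
    corpus = [
        "The CEO said he would announce the results.",
        "The nurse said she had finished her shift.",
        "Our CEO explained his strategy.",
        "The nurse checked on him twice.",
    ]
    print(gender_skew(corpus, {"ceo", "nurse"}))
```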

Fig. 6: AI Governance vs. Data Governance - consider the strong intersection

Ethical Data Sourcing: The origin and ethical sourcing of training data are crucial. Data labeling and content moderation are often performed by low-wage workers (referred to as "clickworkers" or "taskers") and can involve exposure to disturbing content and exploitative conditions. These workers often operate under high pressure. They review hundreds to thousands of "tickets" (images, texts) per day for low wages (e.g., $1-3 per hour), frequently without adequate psychological support. Fair compensation and psychological support for these foundational data workers are essential components of ethical AI.

Fig. 7: Respect where the data is coming from

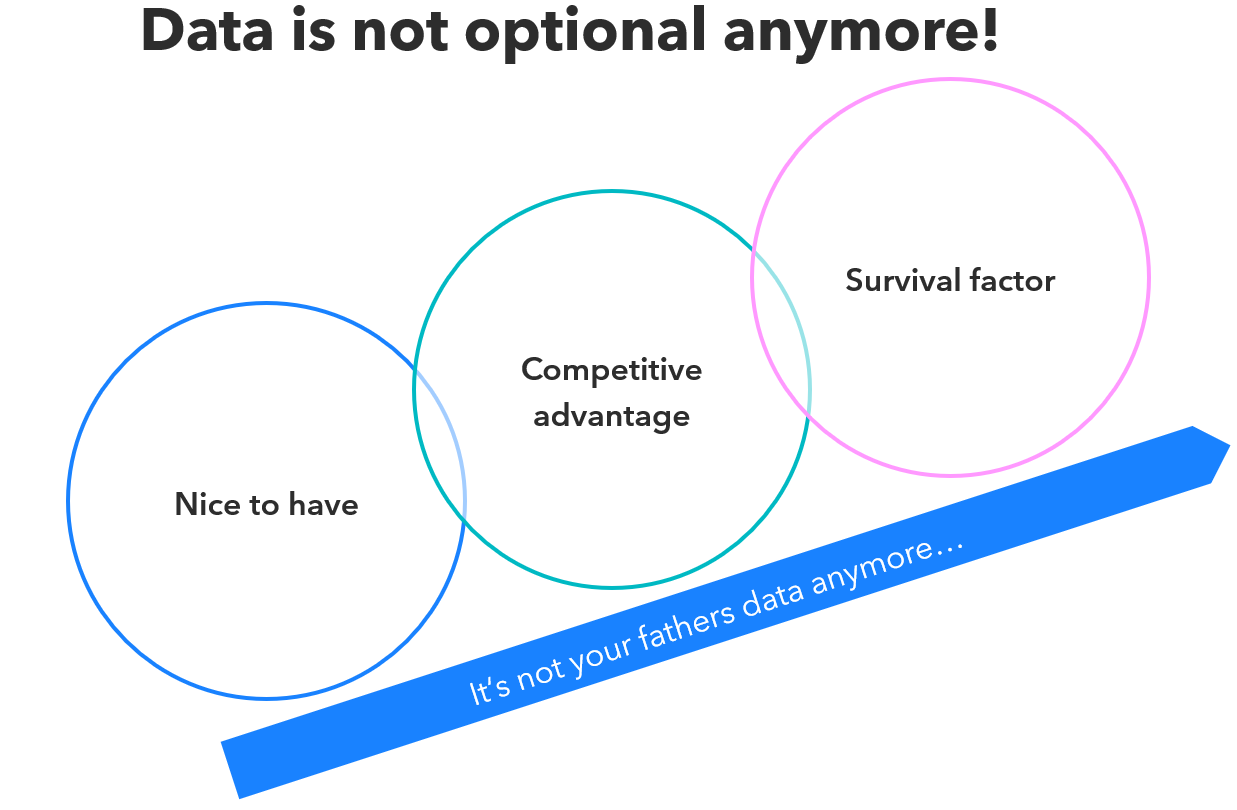

Data as a Survival Factor: In today's landscape, data has moved from being a competitive advantage to a survival factor for companies. Those who do not use their data effectively risk perishing.

Fig. 8: Data & AI are not optional anymore - they are a survival factor

Ethical and Regulatory Considerations

Regulatory frameworks, such as the EU AI Act, focus on regulating the application of AI systems, particularly high-risk ones, to protect fundamental rights, rather than restricting the technology itself.

Fig. 8: You want to win, but not at any cost

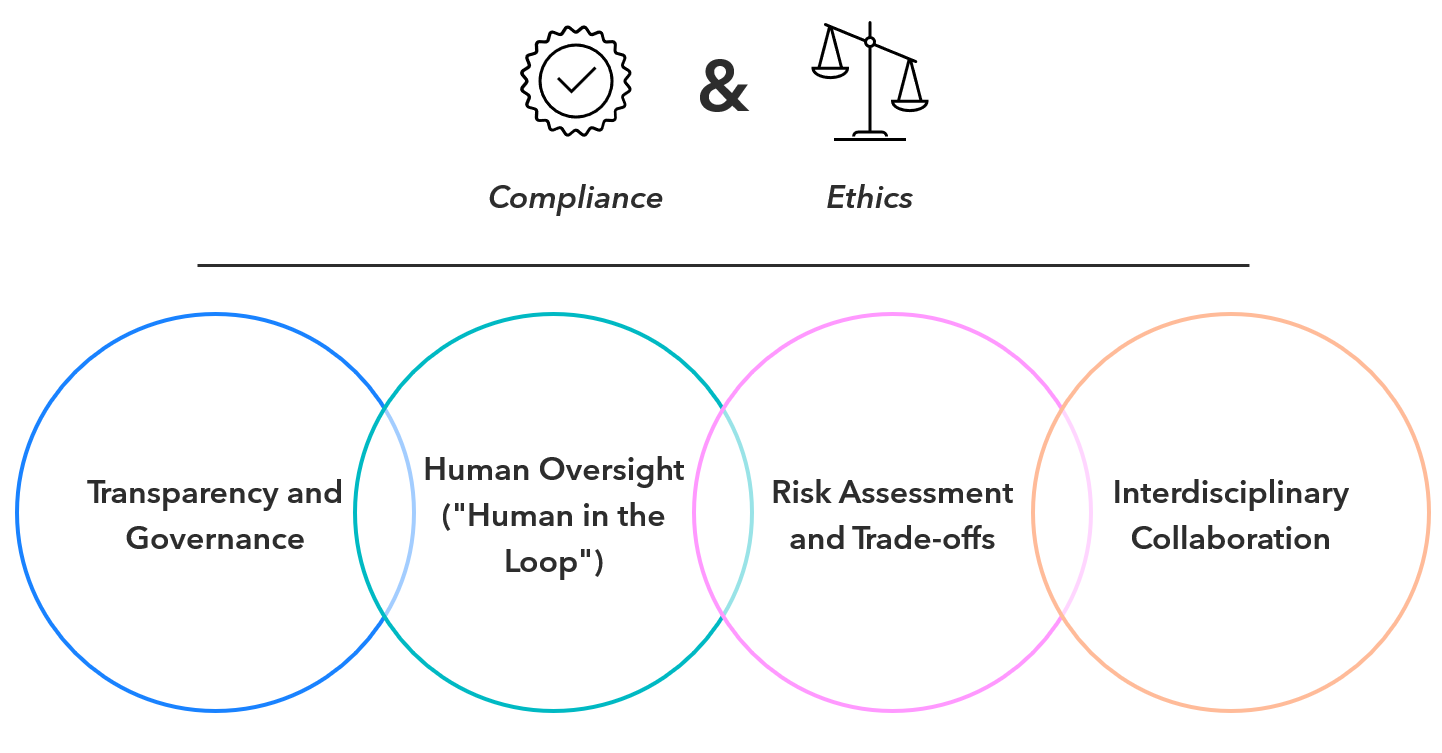

Compliance Requires Transparency and Governance: Compliance with regulations requires radical transparency, robust risk management, comprehensive data and AI governance, and ensuring models are interpretable and decisions are traceable. This includes meticulous documentation of data collection, privacy, and representativeness. The EU AI Act, in essence, demands better "housekeeping" and documentation from AI developers, addressing long-standing "documentation debt" in software and machine learning engineering.

The EU AI Act is a European Union regulation that sets rules for the development, use, and governance of artificial intelligence, aiming to ensure safety, transparency, and respect for fundamental rights.

Human Oversight ("Human in the Loop"): Human oversight remains critical for validating, reviewing, and correcting AI outputs, especially in high-stakes applications where perfect accuracy is unattainable. AI models, especially LLMs, are not 100% accurate; they are designed to produce plausible, not necessarily factual, responses. For example, a doctor might use AI for a second opinion on a diagnosis, but the human doctor always makes the final decision.
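A common way to put human oversight into practice is to accept only high-confidence outputs automatically and send everything else to a person for review. The sketch below assumes the model exposes a confidence score between 0 and 1. Many models do not, and such scores are not necessarily calibrated. The names `Prediction` and `ReviewRouter` and the thresholds are hypothetical. For high-stakes cases such as the diagnosis example, `always_review=True` routes every output to a human.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # assumed model score in [0, 1]; not guaranteed to be calibrated


@dataclass
class ReviewRouter:
    """Hypothetical router: auto-accepts confident outputs, queues the rest for a human."""

    threshold: float
    always_review: bool = False  # True for high-stakes use: a human decides every case
    review_queue: list[Prediction] = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        if not self.always_review and pred.confidence >= self.threshold:
            return "auto"
        # Low confidence or a high-stakes setting: keep a human in the loop.
        self.review_queue.append(pred)
        return "human_review"


if __name__ == "__main__":
    low_stakes = ReviewRouter(threshold=0.6)
    high_stakes = ReviewRouter(threshold=0.9, always_review=True)
    pred = Prediction(case_id="case-1", label="pneumonia", confidence=0.97)
    print(low_stakes.route(pred), high_stakes.route(pred))  # auto human_review
```

Keeping the review queue on the router makes it easy to see how much work the human reviewers receive. That load is itself a signal for tuning the threshold.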

Risk Assessment and Trade-offs: Implementing AI involves inherent risks across economic, legal, and reputational dimensions. Organizations must consciously weigh these risks against potential benefits. Attempting "zero risk" with AI leads to stagnation and is the biggest risk of all. Different applications require different levels of reliability; for instance, medical AI or train control systems demand much higher precision than a chatbot recommending a restaurant.

Interdisciplinary Collaboration: Designing and implementing ethical AI systems requires interdisciplinary collaboration, involving data scientists, engineers, legal experts, business stakeholders, and even ethicists or social scientists. This ensures a holistic view and addresses complex societal impacts.

Practical Implementation Strategies

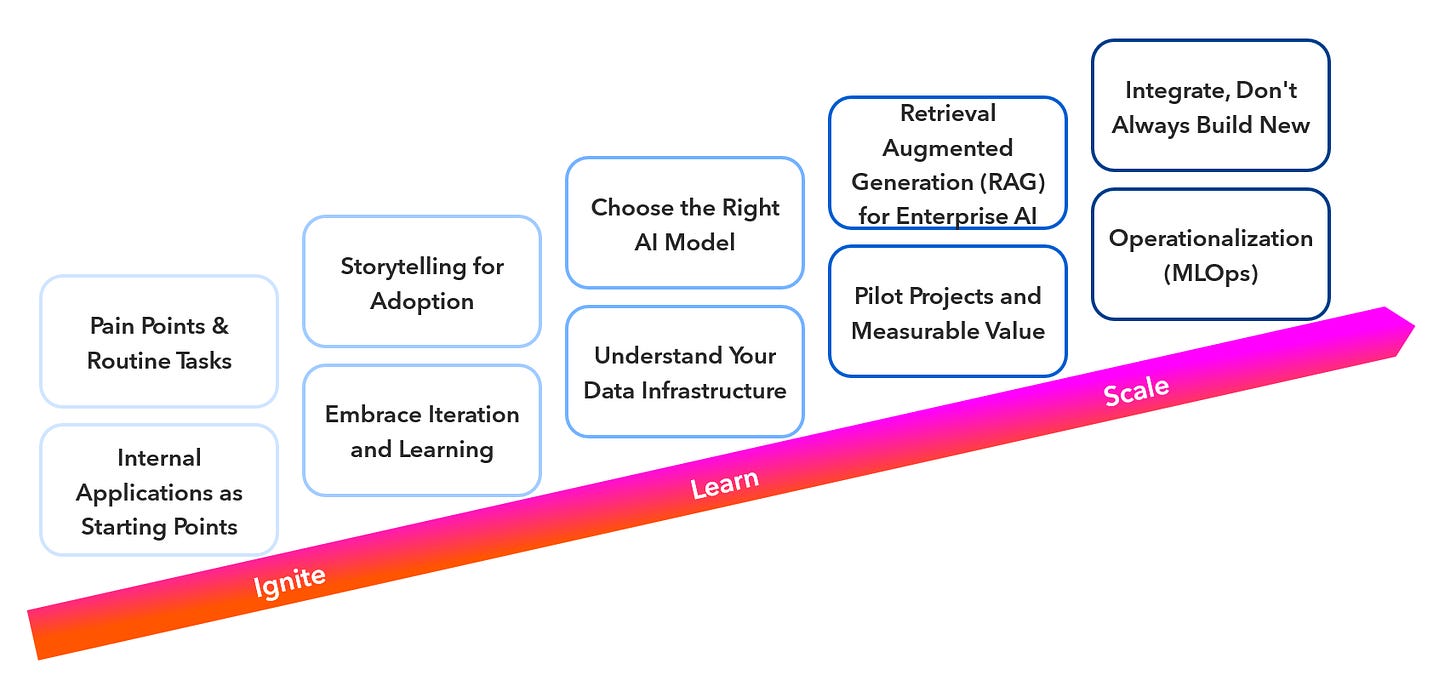

Start by igniting interest in using AI within your organization. Plan for a learning phase and be open to failure. Once the learnings are in place, be ready to scale and create measurable value.

Fig. 9: Ignite - Learn - Scale - trust the process and take the time needed

Start with Pain Points and Routine Tasks: Identify routine tasks that employees dislike or that waste time/talent; these are often ideal candidates for AI-driven automation. For example, an internal knowledge management system or Q&A chatbot can significantly improve efficiency by providing quick, intuitive access to internal information, answering questions about vacation days or equipment.

Pilot Projects and Measurable Value: Proof of Concepts (PoCs) should be designed with a clear path to productive implementation, not just as technical demonstrations. Focus on delivering measurable business value and involve end-users early and continuously in the development process.

Leverage Storytelling for Adoption: Stories can emotionally connect with users, making complex AI concepts relatable and fostering acceptance. Using authentic stories from early adopters within the organization can build trust and encourage wider adoption. Instead of presenting dry facts about AI's efficiency, tell a story about how a sales employee used an AI tool to improve their daily work, making it tangible and relatable for others.

Choose the Right AI Model: Not all problems require a Large Language Model (LLM) like ChatGPT. The simplest model that effectively solves the problem is often the best choice. Smaller, specialized models (e.g., for text extraction or image recognition) can be more robust, precise, and cost-effective for specific tasks than a large, general-purpose LLM. For instance, a text extraction model for specific data from PDFs might be more reliable and cheaper than an LLM for that task.
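Sometimes a few fields in well-structured documents can be extracted with deterministic rules and no model at all. This rule-based sketch assumes the text has already been extracted from the PDF (not shown). It also assumes invoices follow a hypothetical format: IDs such as `INV-2024-00017`, ISO dates, and a `Total:` line. The example illustrates choosing the simplest sufficient tool. It does not replace a trained extraction model when layouts vary.

```python
import re
from typing import Optional

# Hypothetical document conventions; adapt the patterns to your real documents.
INVOICE_RE = re.compile(r"\bINV-\d{4}-\d{5}\b")
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
AMOUNT_RE = re.compile(r"Total:\s*(?:EUR\s*)?(\d+(?:\.\d{2})?)")


def extract_fields(text: str) -> dict[str, Optional[str]]:
    """Extract a few well-defined fields; a field is None when its pattern is not found."""
    invoice = INVOICE_RE.search(text)
    date = DATE_RE.search(text)
    amount = AMOUNT_RE.search(text)
    return {
        "invoice_id": invoice.group(0) if invoice else None,
        "date": date.group(0) if date else None,
        "total": amount.group(1) if amount else None,
    }


if __name__ == "__main__":
    print(extract_fields("Invoice INV-2024-00017 issued 2024-03-15. Total: EUR 1250.00"))
```

Rules like these are cheap, fast and fully predictable. However, they fail silently, returning `None`, as soon as a document deviates from the expected format. This is the point where a specialized model, or an LLM, may become worth its cost.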

Retrieval Augmented Generation (RAG) for Enterprise AI: RAG is a powerful technique that allows LLMs to access external, up-to-date, and proprietary organizational data. This significantly reduces "hallucinations" (AI making up facts) and enables factual, verifiable answers by referencing sources, effectively grounding LLMs in company-specific "Ground Truth". RAG helps LLMs provide precise answers for specific business questions by consulting internal knowledge bases, like legal documents or product specifications. This approach is rapidly becoming a standard for enterprise AI.
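A small, self-contained sketch illustrates the retrieval step of RAG. It ranks documents from a hypothetical in-memory knowledge base by bag-of-words cosine similarity. It then assembles a prompt that cites the source IDs. Production systems typically use learned embeddings and a vector index instead. The call to the LLM itself is deliberately left out because it depends on the provider. `DOCS`, `retrieve` and `build_prompt` are illustrative names.

```python
import math
import re
from collections import Counter

# Hypothetical internal knowledge base: document ID -> text.
DOCS = {
    "hr-001": "Employees receive 30 vacation days per calendar year.",
    "it-014": "Laptops can be ordered through the internal equipment portal.",
    "legal-007": "Contracts above 50,000 EUR require review by the legal team.",
}


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; real systems typically use learned embeddings instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, k: int = 2) -> list[tuple[str, float]]:
    """Return the k most similar documents as (doc_id, score) pairs, best first."""
    q = vectorize(question)
    scored = [(doc_id, cosine(q, vectorize(text))) for doc_id, text in DOCS.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Assemble a grounded prompt that cites source IDs; sending it to an LLM is not shown."""
    context = "\n".join(f"[{doc_id}] {DOCS[doc_id]}" for doc_id, _ in retrieve(question, k))
    return (
        "Answer using only the sources below and cite their IDs. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("How many vacation days do employees get?", k=1))
```

A question about vacation days retrieves the HR entry. The model is therefore instructed to answer from that source and to cite `[hr-001]`, which makes the answer verifiable.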

Retrieval-Augmented Generation (RAG) is an artificial intelligence technique that combines information retrieval with text generation. In this approach, a model first searches external knowledge sources (such as databases, documents, or the web) to retrieve relevant information, and then uses a language model to generate responses based on both the retrieved content and its own capabilities. This improves accuracy, reduces hallucinations, and allows the system to provide more contextually grounded and up-to-date answers.

Operationalization (MLOps): Building an AI solution is only half the battle; operationalizing and maintaining it (MLOps) is equally vital. Continuous monitoring of answer quality and response times is necessary for sustained user adoption. This often involves a dedicated team or a specialized solution to ensure the AI runs reliably in production.
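Continuous monitoring can start small. The following sketch keeps a rolling window of response times and user feedback (a helpful/not helpful flag) for a Q&A assistant. It raises alerts when the 95th-percentile latency or the share of helpful answers crosses a threshold. The class `AnswerMonitor`, the window size and both thresholds are hypothetical defaults, not recommendations.

```python
from collections import deque
from statistics import quantiles


class AnswerMonitor:
    """Hypothetical rolling monitor for a production Q&A assistant."""

    def __init__(self, window: int = 100, max_p95_seconds: float = 3.0, min_helpful_rate: float = 0.7):
        # Bounded deques keep only the most recent `window` observations.
        self.latencies: deque = deque(maxlen=window)
        self.feedback: deque = deque(maxlen=window)
        self.max_p95_seconds = max_p95_seconds
        self.min_helpful_rate = min_helpful_rate

    def record(self, latency_seconds: float, helpful: bool) -> None:
        self.latencies.append(latency_seconds)
        self.feedback.append(helpful)

    def alerts(self) -> list[str]:
        """Return human-readable alerts; an empty list means all checks passed."""
        issues = []
        if len(self.latencies) >= 2:  # quantiles() needs at least two data points
            # The last of 19 cut points for n=20 is the 95th percentile;
            # "inclusive" keeps the estimate within the observed range.
            p95 = quantiles(self.latencies, n=20, method="inclusive")[-1]
            if p95 > self.max_p95_seconds:
                issues.append(f"p95 latency {p95:.2f}s exceeds {self.max_p95_seconds}s")
        if self.feedback:
            rate = sum(self.feedback) / len(self.feedback)
            if rate < self.min_helpful_rate:
                issues.append(f"helpful rate {rate:.0%} below {self.min_helpful_rate:.0%}")
        return issues


if __name__ == "__main__":
    monitor = AnswerMonitor(window=50)
    for latency, helpful in [(1.2, True), (4.8, False), (0.9, True), (5.5, False)]:
        monitor.record(latency, helpful)
    print(monitor.alerts())
```

The monitor uses a rolling window rather than all-time averages. This way, a recent degradation, such as a slower retrieval backend or an outdated knowledge base, surfaces quickly instead of being diluted by older, healthy traffic.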

Internal Applications as Starting Points: Internal AI applications, such as knowledge management systems or Q&A chatbots, are excellent starting points for AI adoption. They can significantly improve efficiency by providing quick, intuitive access to internal information. An example is a company using an internal Q&A bot to answer employee questions about HR policies, reducing the burden on HR staff.

Understand Your Data Infrastructure: AI models need robust data infrastructure. Many existing IT infrastructures are "heterogeneously grown" and not "AI-ready". Investing in data quality, access, and infrastructure is a fundamental prerequisite for successful AI implementation.

AI-Ready describes the state of being prepared to adopt, implement, and effectively use artificial intelligence. It refers to the necessary skills, infrastructure, data readiness, organizational culture, and ethical awareness that enable individuals, teams, or organizations to leverage AI technologies responsibly and productively.

Integrate, Don't Always Build New: AI applications are often integrated as "add-ons" into existing systems rather than always creating entirely new systems. This leads to more seamless user experiences and leverages existing investments. For example, integrating AI into an existing sales system to suggest leads rather than building a standalone AI application.

Embrace Iteration and Learning: AI development is an iterative process. It's okay to experiment and fail, as long as learnings are captured and applied. Don't be paralyzed by the desire for perfection from the outset.

Evolving Skills and Mindset

Don’t invest only in technology. Soft skills are essential for adoption and for getting real value from AI.

Fig. 10: Skills and mindset are crucial for success

Modern Leadership Skills: Skills like clear communication, delegation, and critical thinking are increasingly important for interacting with and leveraging AI systems. Leaders need to understand the technology's implications and guide their organizations through change. An anecdote highlights how a manager used "human-like" prompts or even offered "tips" to AI systems, leading to demonstrably better results (up to 13% improvement).

AI Literacy: It's crucial for individuals and organizations to gain a baseline understanding of how AI systems work. This enables informed decision-making and avoids inappropriate assumptions. Organizations should form "special ops teams" to educate themselves internally and share knowledge across the enterprise.

AI Literacy refers to the knowledge, skills, and attitudes that enable individuals to understand, critically evaluate, and effectively interact with artificial intelligence systems. It includes being aware of how AI works, recognizing its opportunities and limitations, assessing its ethical and social implications, and using AI responsibly and confidently in everyday life, education, or work contexts.

"Prompt Engineering" as a Key Skill: Prompt Engineering is a key skill for effectively interacting with generative AI, allowing users to guide models to produce better results. Studies have shown that treating AI more "humanly" in prompts (e.g., adding emotional context or even offering "tips") can lead to better results, similar to how human interaction affects productivity. However, it is a skill, not necessarily a standalone job, and is continuously evolving with new model capabilities.

Growth Mindset: Adopting a "growth mindset" is beneficial for navigating the rapidly evolving AI landscape, as it encourages continuous learning and adaptability.

This content was selected and refined from various talks about AI on the unf'#uck your data podcast. It is a great starting point for diving deeper into real-world experiences.